Introduction

Welcome to this blog post. I'm excited to write about Kubernetes, an open-source platform that helps manage containerized workloads in the cloud.

Keywords

DevOps - DevOps is a set of practices that combines software development (Dev) and IT operations (Ops). It aims to shorten the systems development life cycle and provide continuous delivery with high software quality.

Container - Containers decouple applications from the underlying host infrastructure. This makes deployment easier across different cloud or OS environments.

Open-source - Open source refers to software that has been made freely distributable and modifiable. Its license permits anyone to use and modify the product's source code, design documents, or other content.

In short, Kubernetes is an open-source container orchestration system. It's used to automate the management, scaling, and deployment of applications. Kubernetes was first created by Google, but the Cloud Native Computing Foundation currently looks after the project.

Google first introduced Kubernetes in 2014. It was created by Joe Beda, Brendan Burns, and Craig McLuckie, who were soon joined by other Google engineers. Its design was heavily influenced by Google's Borg system, and many of the early contributors had previously worked on Borg. Kubernetes has since emerged as one of the most exciting technologies in the world of DevOps and has gained a lot of attention from DevOps professionals. Kubernetes, commonly known as K8s, is a portable, extensible platform for managing containerized workloads and services.

The main goal of Kubernetes is to provide a platform that makes it easier to deploy, scale, and manage application containers across a cluster of hosts. Numerous cloud providers offer platform as a service (PaaS) or infrastructure as a service (IaaS) built on Kubernetes. The project's original codename within Google was Project 7, a reference to the Star Trek character Seven of Nine, a former Borg. The seven spokes in the logo are a nod to that codename. Borg was written in C++, whereas Kubernetes is implemented in Go. Kubernetes v1.0 was released in 2015. Along with the release, Google partnered with the Linux Foundation to form the Cloud Native Computing Foundation (CNCF).

How does it work?

Kubernetes has a primary/replica architecture made up of many components. These can be divided into those that manage an individual node and those that are part of the control plane. Understanding the architecture is essential if you wish to learn Kubernetes.

Pod: This unit in Kubernetes architecture groups together containers that must work together for an application.

Cluster: Multiple nodes are grouped together in a cluster in Kubernetes.

Node: A node is a machine, physical or virtual, that runs containerized workloads. It is also known as a worker or, in older documentation, a minion. Every node in the cluster must run a container runtime such as Docker or containerd. It also needs a few other components to communicate with the primary and to handle the network configuration of its containers. These components include:

Kubelet: The kubelet is responsible for the running state of each node, ensuring that all containers on the node are healthy. It takes care of starting, stopping, and maintaining application containers. It monitors the state of each pod, and if a pod is not in the desired state, the pod is redeployed to the same node.

Kube-proxy: kube-proxy implements a network proxy and a load balancer on each node. Its primary job is to route traffic to the right container based on the IP address and port number of the incoming request.

Container runtime: The container runtime is the software that runs containers, which reside inside pods. A container is the lowest level of a microservice. It holds the running application together with its libraries and dependencies.
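To make the kube-proxy description above more concrete, here is a minimal Python sketch of routing by IP and port. It is not how kube-proxy is implemented. The `ServiceProxy` class, its method names, and the round-robin choice of backend are all assumptions made for illustration, and the addresses in the usage note are made up.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Tuple

Address = Tuple[str, int]  # (IP address, port)


class ServiceProxy:
    """Toy stand-in for the routing role described for kube-proxy (hypothetical)."""

    def __init__(self) -> None:
        # Maps a service's (IP, port) to an endless round-robin iterator
        # over the pod backends that serve it.
        self._routes: Dict[Address, Iterator[Address]] = {}

    def register(self, service: Address, backends: List[Address]) -> None:
        """Record which pod addresses sit behind a service address."""
        if not backends:
            raise ValueError("a service needs at least one backend")
        self._routes[service] = cycle(backends)

    def route(self, destination: Address) -> Address:
        """Return the backend that should receive traffic sent to `destination`."""
        if destination not in self._routes:
            raise LookupError(f"no service registered for {destination}")
        return next(self._routes[destination])
```

Registering `("10.96.0.10", 80)` with two backends and calling `route` repeatedly alternates between the two pod addresses, which is the load-balancing half of the description. The lookup by `(IP, port)` is the proxy half.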

Kubernetes Control Plane

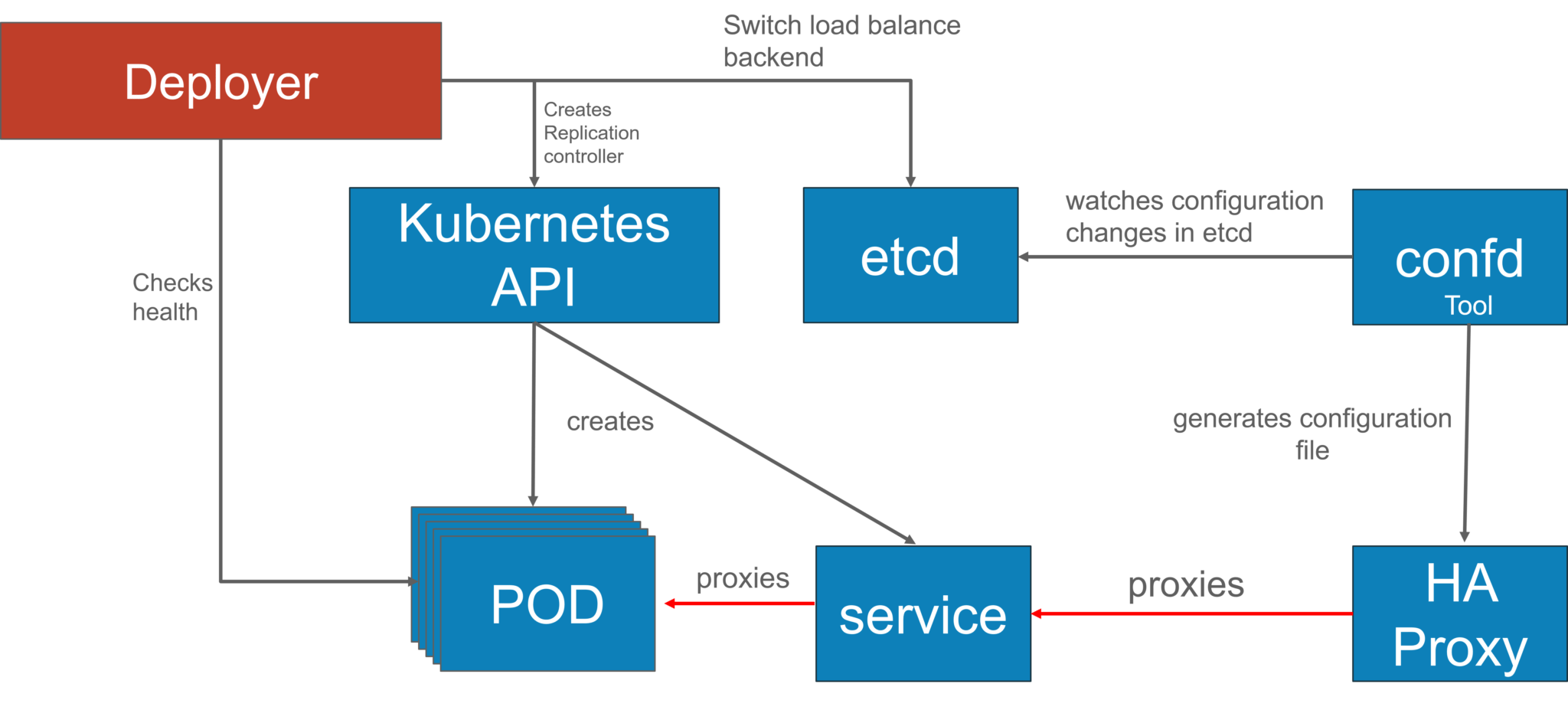

The control plane hosts the Kubernetes components that offer essential functions such as exposing the Kubernetes API, scheduling workload deployments, controlling the cluster, and directing communications throughout the whole system. As shown in the diagram, the control plane monitors the containers running on each node as well as the health of all registered nodes. Container images, which serve as the deployable artifacts, must be made available to the Kubernetes cluster via an image registry, either private or public. The nodes that run the applications pull the images from the registry through the container runtime. The various components are:

Scheduler: The scheduler decides which node each pod should run on, based on its evaluation of resource availability, and keeps track of how much of each node's capacity is already allocated. It takes into account resource requirements, resource availability, and a range of additional user-supplied constraints and policy directives, such as quality of service (QoS), affinity/anti-affinity requirements, and data locality. An operations team can define the resource model declaratively. The scheduler interprets these declarations as instructions for provisioning and assigning the appropriate set of resources to each workload. It is the scheduler's duty to make sure that workloads are not scheduled in excess of the available resources.
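The core idea behind the scheduler can be sketched in a few lines of Python: filter out the nodes that cannot fit the pod, then pick the best of the rest. This is only a rough illustration, not the real scheduler. The `Node` and `PodRequest` types, the `schedule` function, and the "most free capacity wins" scoring rule are all assumptions, and affinity and QoS are left out entirely.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int    # free CPU in millicores
    mem_free_mib: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Pick a node for `pod`, or return None if no node has enough free resources."""
    # Filter step: keep only nodes that can fit the request.
    feasible = [
        n for n in nodes
        if n.cpu_free_m >= pod.cpu_m and n.mem_free_mib >= pod.mem_mib
    ]
    if not feasible:
        return None  # never schedule beyond the available resources

    # Score step (assumed rule): prefer the node with the most free capacity.
    best = max(feasible, key=lambda n: (n.cpu_free_m, n.mem_free_mib))

    # Bind step: reserve the resources so later pods see the reduced capacity.
    best.cpu_free_m -= pod.cpu_m
    best.mem_free_mib -= pod.mem_mib
    return best
```

A pod that asks for more than any node has free gets `None` back, which corresponds to the pod staying unscheduled instead of overcommitting a node.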

etcd: etcd is a key-value data store originally developed by CoreOS. It is durable, lightweight, and distributed, and it stores the cluster's configuration data. It is the single source of truth, representing the overall state of the cluster at any given point in time. To keep an application in the intended state, several other components and services watch for changes to the etcd store. That state is described by a declarative policy, which is essentially a document that specifies the ideal environment for that application so that the orchestrator can work toward it. Only the API server has access to the etcd database. Any component of the cluster that wants to read or write to etcd goes through the API server.

API Server: The API server exposes the Kubernetes API by means of JSON over HTTP, providing the representational state transfer (REST) interface for the orchestrator’s internal and external endpoints. The CLI, the web user interface (UI), or another tool may issue a request to the API server. The server processes and validates the request, and then updates the state of the API objects in etcd. This enables clients to configure workloads and containers across worker nodes.
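The relationship between the API server and etcd can be illustrated with a small in-memory Python sketch: requests are validated first, then written to a key-value store, and anything watching the store is notified of the change. The class names `ToyStore` and `ToyAPIServer`, the required fields, and the key layout are all hypothetical. Real Kubernetes validates far more, and it stores objects under its own key scheme.

```python
from typing import Callable, Dict, List, Optional

Watcher = Callable[[str, dict], None]


class ToyStore:
    """In-memory stand-in for etcd: key-value data plus change notifications."""

    def __init__(self) -> None:
        self._data: Dict[str, dict] = {}
        self._watchers: List[Watcher] = []

    def put(self, key: str, value: dict) -> None:
        self._data[key] = value
        # Tell every watcher about the change, in registration order.
        for notify in self._watchers:
            notify(key, value)

    def get(self, key: str) -> Optional[dict]:
        return self._data.get(key)

    def watch(self, callback: Watcher) -> None:
        self._watchers.append(callback)


class ToyAPIServer:
    """The only component in this sketch that writes to the store."""

    def __init__(self, store: ToyStore) -> None:
        self._store = store

    def apply(self, obj: dict) -> str:
        """Validate a request, persist the object, and return its key."""
        for required in ("kind", "name"):
            if required not in obj:
                raise ValueError(f"missing required field: {required}")
        # Hypothetical key layout, chosen only for this example.
        key = f"/{obj['kind'].lower()}s/{obj['name']}"
        self._store.put(key, obj)
        return key
```

Calling `apply({"kind": "Pod", "name": "web"})` stores the object under `/pods/web` and triggers any registered watcher, while a request missing `name` is rejected before it ever reaches the store.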

Controller Manager: The controller keeps nodes and pods stable by continually checking the health of the cluster and the workloads running on it. For example, if a node fails, the pods running on it may become unreachable. In that case, it is the controller's responsibility to schedule the same number of replacement pods on a different node. This keeps the cluster in the expected condition at all times. A controller is a reconciliation loop that works to drive the actual cluster state toward the desired cluster state. The controller manager is the process that runs and manages this set of controllers.
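A reconciliation loop is easier to grasp with a small example. The Python sketch below runs one pass of such a loop for the node-failure case described above. The function name `reconcile`, the `pod-N` naming, and the "fewest pods first" placement rule are assumptions for illustration only. A real controller would go through the API server and leave placement to the scheduler.

```python
from itertools import count
from typing import Dict, List


def reconcile(desired: int, pods: Dict[str, str], healthy_nodes: List[str]) -> Dict[str, str]:
    """One pass of a toy reconciliation loop.

    `pods` maps pod name -> node name (the actual state). The result is a
    new mapping with exactly `desired` pods, all placed on healthy nodes.
    """
    if desired > 0 and not healthy_nodes:
        raise RuntimeError("no healthy nodes available")

    # Observe: pods on failed nodes are treated as lost.
    actual = {pod: node for pod, node in pods.items() if node in healthy_nodes}

    # Act: create replacement pods until the desired count is reached.
    ids = count(1)
    while len(actual) < desired:
        name = f"pod-{next(ids)}"
        if name in actual:
            continue  # name already taken by a surviving pod
        # Assumed placement rule: the healthy node currently running the fewest pods.
        target = min(healthy_nodes, key=lambda n: list(actual.values()).count(n))
        actual[name] = target

    # Act: remove surplus pods if there are more than desired.
    while len(actual) > desired:
        actual.popitem()

    return actual
```

With three desired pods, two of which were running on a failed `node-a`, one pass returns three pods that all sit on healthy nodes. Running `reconcile` again on that result changes nothing, which is the property that lets a controller repeat the loop indefinitely.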

Well, I hope you have grasped a little bit about Kubernetes. In the next part, we will look at the architecture in depth and get some hands-on practice.